Petal Crash Behind the Scenes: Artificial Intelligence for Puzzle Game CPU Players

As I sit down to start writing this, it’s evening on the launch day of Petal Crash for PC, Switch, and PS4, a retro-style action puzzle game created by my friend Ashley Jenkins and contributed to by several others, including myself. Ashley and I spent a good chunk of today bouncing around different places online looking for reactions to the game, and something that people were surprisingly interested in when we joined conversations was how the AI that powers the CPU opponents works. This was my most major contribution to the game, and it’s something that I am pretty proud of, so I thought it would be worth trying to write an article on the design of the system and how it’s used in the game to give each CPU character a unique playstyle that fits their personality. Note that I may elide or simplify some things for the sake of brevity, or even get some small details wrong since we did the bulk of of early development together in person so I have nothing but my poor memory to reference. I’ll try to explain game-specific vocabulary where I can, but an understanding of basic Petal Crash gameplay is probably required too.

Beginnings

At the time I got involved in the project, Ashley had been working on Petal Crash for about half a year. Before the Kickstarter campaign, a private build of the puzzle editor mode was shared with a small group of friends, and seeing the puzzle codes being exported in what was basically a raw serialization of their internal representation got me thinking about the problem of compressing those codes for ease of sharing, specifically making them small enough to fit in a tweet, or even encode in a QR code (an idea that ended up never making it off the drawing board). The details of that are another topic entirely, but writing the code to compress and decompress puzzles into tweet-sized chunks was how I first started officially contributing to Petal Crash.

Shortly after that, the Kickstarter was launched and successfully funded, and Ashley kept working on the game for several more months. At one point, Ashley mused in private that developing the AI for the game was going to be a nightmare to tackle, which got the gears turning in my head once again. I started talking to Ashley to get a better idea of what was needed, and after we decided to tackle the problem together, we came up with several key features that we wanted CPU players to have:

- Unique playstyles that suit each character’s personality without any two characters feeling the same to play against

- High enough max difficulty to challenge expert players

- Low enough minimum difficulty to accommodate inexperienced players who might have never played an action puzzle game before

- Short enough required “thinking time” that moves could be made at a reasonable pace

Making a Plan

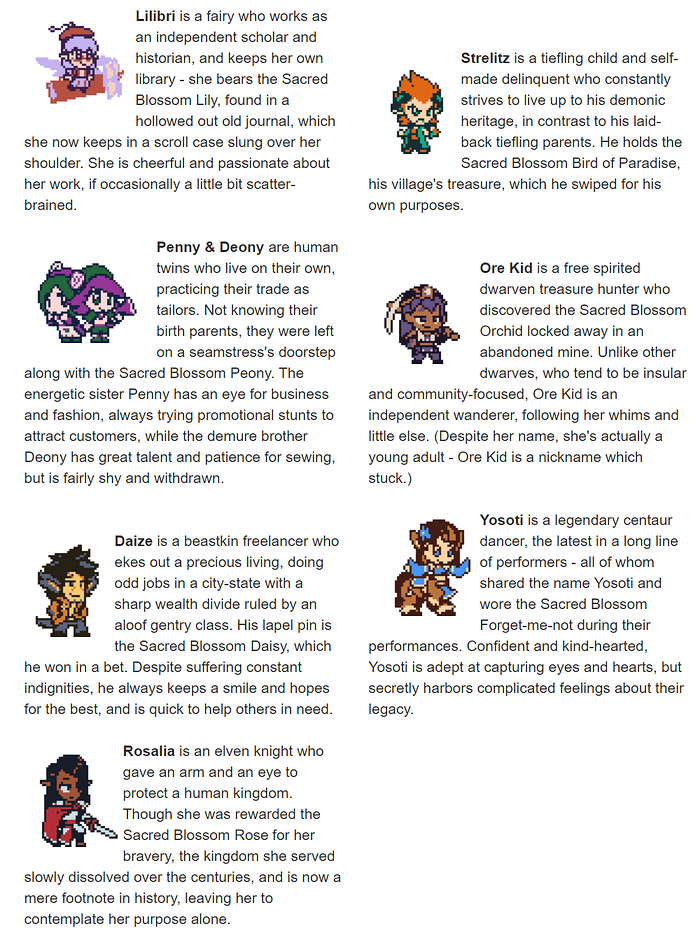

To understand the decisions that went into the design of the system, one first needs to understand the most important requirement, which was being able to give each CPU player a unique personality. As seen below, each character has a well-defined personality, and each character’s story mode consists of competing with each of the others in a match, so there’s a lot of room for those personalities to shine in both the writing and gameplay.

Here’s a list of the final CPU behaviors adapted from the patch notes sent out to beta testers when the feature went live, which I don’t think really changed much at all from Ashley’s original design throughout the process aside from small tweaks to parameters for difficulty adjustment reasons that I’ll discuss later.

- Lilibri: Makes deliberate, well-planned moves, but panics when hit with attacks.

- Strelitz: Makes moves quickly, without much forethought, as if he’s button-mashing in a fighting game.

- Penny & Deony: Switch between a faster, haphazard playstyle and a slower, more thoughtful playstyle every 15 seconds to represent the twins’ differing personalities.

- Ore Kid: Uses the skip button to fill her field mostly full of pieces, then chips her way out like she’s mining. She’s not good at recognizing chain opportunities but will sometimes luck into them.

- Daize: When in danger, will focus on setting up big moves without setting them off and will set off prepared moves once the hit is resolved to counter the incoming garbage blocks.

- Yosoti: Plays slightly slower, but is better at recognizing and preparing chain clears.

- Rosalia: Takes her duty seriously and does not hold back or show weakness, so no gimmick or behavior changes from default optimized AI.

With a clear set of goals defined, I then set about figuring out how exactly to build all of this. I tried to do some preliminary research and see if anyone had written any articles similar to this one about their own games, but didn’t come across much (which makes sense since not many people are making games in a genre whose heyday was two decades ago). I’m sure something must exist out there, but I already had an idea that I was pretty sure would work and mostly just wanted to make sure there wasn’t some vastly greater well-known option I was overlooking, so I didn’t spend too much time doing further research.

Building the Underlying AI

My background is in computer science, so of course the very first approach that came to mind when dealing with this problem was a search tree with some sort of scoring function to evaluate potential future states, as is commonly used in AI for traditional turn-based games like chess. After evaluating potential future states and finding the one with the best score according to the state-scoring algorithm, the move for this turn that leads down the desired path is selected. Coincidentally, a Petal Crash board is the same size as a chess board, and the fact that Petal Crash is effectively turn-based made this approach a perfect fit. From there, it was a matter of determining what the scoring function should look like, and what parameters it needed. Taking the CPU personalities into account, we arrived at the following parameters:

- Search depth: How deep to search in the possibility space. The algorithm goes through every possible move that could be made from the current state and scores it, then each potential state that could follow has its ancestors’ scores factored in. This continues until it hits the search depth limit. The move that leads down the path to the state that gets the best final score wins. Search depth allows us an amount of control over how smart the AI is, but most importantly allows us to stop it from searching too deeply and using too many resources or taking too long to make a decision.

- Block clear weight: Adjusts the importance of number of blocks cleared by a given move.

- Chain size weight: Adjusts the importance of chain size (chain-reaction clears of multiple clusters of blocks from the same input).

- Chain exponent: An exponent to use on the portion of the score calculated from the number of blocks cleared, the chain size, and their respective weights. Usually this is 1, but for characters that really want to focus on chains, this can be increased.

- Garbage clear weight: Adjusts the importance of removing garbage blocks from the board. Allows characters to go out of their way to clean up garbage or not pay any particular attention to it depending on tuning.

- Search depth weight: A somewhat ambiguously-named parameter that is used to weight moves further down in the search tree. For example, setting this value to something higher than 1 will result in the AI heavily favoring setting up moves for the future instead of making a match as the current move, while setting it to something between 0 and 1 would make each subsequent search level contribute less and less to the final score for its search path, effectively making the CPU “distrustful” of its ability to judge the future.

- Skip weight: “Skipping” and “fast-forwarding” are terms we use interchangeably to refer to the feature that allows the player to automatically advance block spawners until the next set of blocks spawns in. Otherwise, these advance one step per move made by the player. Skip weight influences how likely the CPU is to select skipping as their move. This is used extensively by Ore Kid to create her playstyle where she fills her board with pieces and chisels her way out.

- Noise weight: A weight for how much to “fuzz” a given score, obscuring to the AI how good it truly is. The calculation this controls can end up not only making some moves look worse than they really are, but making some look better than they actually are too. Effectively, this is a number between 0 and 1 that linearly interpolates from an unfuzzed score (0) to a score heavily distorted by multiplication by a potentially small or potentially large random number (1).

- Block clear cap and chain size cap: Maximum values for the amount of block clears and the size of a chain that will be factored into a score. Anything that surpasses these values will be clamped, which means that characters that aren’t supposed to be good at spotting chains can be configured to not be able to tell the difference between, for example, a 3-chain and anything larger. These were added after the original version to address some issues detailed below.

Putting It All Together

The AI system takes an input consisting of a representation of the current board state plus all of these parameters, simulates and scores future board states, picks a winner, and outputs the block position and direction to move it in, which is then fed to the code that controls the AI player’s movements. The parameters are not set in stone, and can change due to other factors in the match. For example, when Penny and Deony flip who’s at the controls, the set of parameters fed to the underlying AI algorithm changes completely. When Daize sees a big attack coming, he can switch modes and ask the algorithm for a move that he can take now to counter it instead of continuing with his normal build-for-the-future approach. Some behaviors are achieved without modulating AI system parameters at all, such as when Lilibri gets hit by a big attack; she can ask for her next move, and then sit on that information for a certain amount of time while wiggling her cursor around wildly to demonstrate her distress before actually making the move. With the AI system plus additional code on top to modulate the parameters and perform other actions, Ashley was able to successfully create all of the desired AI personalities, which was a really exciting success the first time we saw it all wired up in game!

So we met our goal of enabling unique playstyles, but what about the difficulty range? When tuned correctly and without any other limiters on it, the AI was frighteningly good, given its ability to make “perfect” decisions (within the limitations of its allowed search depth). In fact, even the maximum normal difficulty in the final game still has some amount of limiters on it, so a high difficulty skill ceiling was definitely reached. (Bonus easter egg: the “limiters off” mode is accessible in VS CPU mode by setting your difficulty to 10.0 then pressing right again 5 times until the difficulty indicator becomes 💀. Even the best player in the beta testing group claims to only be able to beat this only once in every few dozen attempts. The only limitations placed on it in this mode are on search depth since that needs bounds for performance reasons, and there is an enforced minimum time between moves, though it is short.)

With high-end difficulty checked off our list, what about low-end difficulty? Difficulty is rated in-game on a scale of 1.0 to 10.0 in increments of 0.1, and while the selected difficulty level affects other things like the delay between each move the CPU makes, it’s also directly used to calculate how large the score noise weight should be. All the other parameters that control a CPU’s personality can stay the same, but this noise can be amplified based on how easy they’re supposed to be in order to make them more likely to “make mistakes” or otherwise not see moves that the rest of their parameters would define as “optimal”. Even at low difficulties, good moves can still break through the noise so your opponent isn’t totally dead in the water, but it keeps the AI under control in a way that we found scaled very well with the numeric difficulty system once the relationship between the two numbers was dialed in.

Finally, the last requirement was that the AI not be too expensive. We needed a search depth of at least 2 or the CPUs would have no foresight whatsoever, and we found that a search depth of 3 was very strong, but could cause performance bottlenecks on some machines. The compromise we tried to come to was adding another parameter to the AI call that determined the probability of whether the AI would be allowed to look an extra layer deeper for a given call, so that sometimes the AI can take an extra moment to think and make a better move, if certain other requirements are met that we deem worthy of looking deeper beyond. Specifically, this has a chance of triggering if it looks at a search depth of 2 and the best move it sees scores below a very low threshold in order to try and avoid making a bad move, or if the move would set off a chain, to try to avoid setting off a chain that might be better in the future if we took a different action this turn. Unfortunately, even this ended up not meeting our performance requirements on machines with limited computing power, so we disabled the feature for all standard difficulties. For the secret 💀-difficulty AI, since it’s an easter egg and we didn’t have to worry so much about it impacting regular gameplay if performance issues were encountered, this is enabled, but on certain devices the impact on game performance is indeed noticeable and it’s best played on a PC with processing power to spare.

While we would have liked to have had a search depth of 3 available during normal gameplay, the amount of work required to calculate the board state after a given move is extremely nontrivial compared to something like making a chess move, so searching the probability space is much slower, and the exponential growth of moving a layer deeper is just too much. Given more time and effort, we may have been able to overcome these technical difficulties, but we decided that since search depth 2 was still able to provide a very fun and challenging experience, there was no real practical reason to pursue it further since our goals were met. Since the CPUs run the entire AI algorithm every turn, they’re constantly reevaluating their current course of action, so even planning just one extra turn ahead allows for the possibility of using many moves to set up large chains if it keeps seeing new components that it could add to the chain with a single move before setting it off. Part of Ashley’s mentality for actually shipping games is to stop fussing with already good things in search of some perfect ideal, so I was able to accept that depth 2 was enough and let it go. Still, if Ashley ever gets to make Petal Crash 2 and I’m brought back on for AI, I’d love to challenge myself to make it even more bonkers next time around.

Post-Implementation Tuning

After the first build with story mode went out to beta testers, the AI got its first real workout and we discovered a few issues with the parameters we’d initially set. For example, Yosoti’s personality is that they make their moves more slowly but they set up big chains. It turns out that even on lower difficulties, they were a little too good at making chains, and they were becoming so appealing to the AI algorithm that they were breaking through the fuzzing and getting picked fairly consistently. This is when we introduced the block clear and chain size cap parameters to try and limit Yosoti’s ability to tell huge chains from just-okay ones, and this coupled with some tweaks to their other parameters brought their reign of terror to an end.

Another problem character was Ore Kid. At the time, the algorithm for determining where to place new block spawners was fairly naive, and would choose from open locations at random. Since there are only 5 colors and limited board space, this often led to a piece spawning in adjacent to a piece of the same color, meaning if you skipped a lot, you often got fodder for basically-free combos. Even though Ore Kid’s parameters weren’t tuned for her to seek out combos specifically, so many would end up falling into her lap due to the luck of the spawning algorithm that she would often stumble into huge combos. To a certain degree, this was an expected part of her playstyle, but it turned out to be just a little too much. After this, she also had the max combo cap applied which reduced her power a little bit, but since she was getting those huge combos on accident anyway, her power wasn’t truly reined in until a later patch which revised spawner placement algorithm to try to avoid placing spawners adjacent to blocks of the same color to discourage spamming skipping as a “cheap” strategy for human and AI alike.

Final Thoughts

Overall, I’m really happy with how the AI in Petal Crash turned out. Ashley’s concepts for personalities-as-playstyles really shines through, and the difficulty curve feels really satisfying. Due to the computational requirements specific to Petal Crash where a single move can have cascading consequences, I wasn’t able to make the max level quite as ruthlessly bloodthirsty as I might have liked, but it still provides a challenge that I think will be stumping even the strongest players for a long time, so I can live with that.

Hopefully this was interesting to those who care about this kind of stuff. Don’t forget to play Petal Crash, and thanks for reading!